Serverless computing: is it the future of scalable applications?

Serverless computing is one of the hottest trends in cloud technology. Despite the misleading name, it doesn’t mean there are no servers – it means that developers and companies don’t have to manage servers themselves, because the cloud provider handles that. In the serverless model, we create and run application code without worrying about infrastructure, scaling, or server maintenance. The model is tempting because it promises near-unlimited scalability, lower costs, and faster time-to-market, but it also has its limitations.

Let’s take a business perspective to explore what serverless is, how it works, what benefits it brings, and what challenges it entails, as well as which platforms offer such services. Finally, we’ll consider whether serverless is truly the future of scalable applications and when it makes (or doesn’t make) sense to use it.

What is serverless computing?

Serverless computing is a cloud computing model in which the entire server infrastructure is managed by the service provider, and the user pays only for the actual use of computing resources. This means that a company using serverless doesn’t reserve servers or computing power in advance – resources are allocated dynamically when the code is executed and released when no longer needed (the “scale to zero” mechanism).

In practice, the most common variant of serverless is Function as a Service (FaaS), where the application is divided into small functions triggered by various events (e.g. API calls, file uploads, database events, time-based schedules, etc.). The developer writes the function code and defines the triggers, while everything else – from server provisioning, through automatic scaling, to OS updates – is handled by the cloud provider.
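To make the FaaS idea concrete, here is a minimal sketch of a single function written in Python. The `handler(event, context)` signature follows the convention used by AWS Lambda's Python runtime; the shape of the incoming event (an HTTP-style payload with `queryStringParameters`) is an assumption made for this example, and other platforms and triggers deliver differently shaped payloads.

```python
import json


def handler(event, context):
    """Entry point the platform would call once per triggering event.

    `event` carries the trigger payload; `context` carries runtime metadata.
    The query-string shape used here is an assumption for this example.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: simulate the event an HTTP trigger might deliver.
    print(handler({"queryStringParameters": {"name": "Ada"}}, None))
```

Nothing in the function deals with servers, ports, or processes. Which event invokes it is configured on the platform side, not in the code.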

A key feature of serverless is the pay-as-you-go billing model. Unlike traditional hosting (even in the cloud), where we pay for rented virtual machines regardless of their usage, in serverless the fee is charged only for the execution time of the code and the resources used (e.g. RAM) during that time. When functions aren’t running, they incur no costs – it’s like switching from a subscription plan to paying only for used minutes or megabytes.
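The billing logic described above can be sketched as a small calculation. FaaS platforms commonly charge for compute in GB-seconds (memory allocated multiplied by execution time) plus a fee per request. The two prices below are illustrative placeholders, not any provider's current price list, and free tiers are ignored.

```python
def monthly_cost(invocations, avg_duration_ms, memory_mb,
                 price_per_gb_second, price_per_million_requests):
    """Estimate a monthly bill: compute charge (GB-seconds) plus request charge."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_charge = gb_seconds * price_per_gb_second
    request_charge = (invocations / 1_000_000) * price_per_million_requests
    return compute_charge + request_charge


if __name__ == "__main__":
    # Assumed workload: 2 million calls a month, 200 ms each, 512 MB of memory.
    cost = monthly_cost(
        invocations=2_000_000,
        avg_duration_ms=200,
        memory_mb=512,
        price_per_gb_second=0.0000167,      # placeholder price
        price_per_million_requests=0.20,    # placeholder price
    )
    print(f"{cost:.2f}")  # about 3.74 under these assumed prices
```

With zero invocations the function returns zero, which is the "no idle cost" property in numbers.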

It’s worth emphasizing that “serverless” does not mean the absence of servers, but rather the absence of responsibility for them on the user’s side. The provider (e.g. AWS, Azure) maintains physical servers in its infrastructure and automatically assigns them to functions as needed. For the developer, servers are “invisible” – they focus on writing application code and business logic, while the scaling “magic” happens behind the scenes.

How does the serverless model work?

Serverless platforms such as FaaS are built on an event-driven architecture and isolated, container-like execution environments. When a trigger (event) is detected – e.g. an HTTP request to an API, a new record in a database, or a scheduled task at a specific time – the serverless platform executes the appropriate function.

In the background, the provider creates an isolated execution environment (a container or lightweight virtualization) for the function, loads the code and its dependencies, and then runs the function. Once the function finishes, the environment may be frozen or destroyed to free up resources. If the same function is triggered again shortly after, the platform can reuse the already warmed-up environment (warm start), reducing response latency.
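The difference between a cold and a warm start shows up directly in how function code is usually organized. In the sketch below, module-level code stands in for the one-time work done when a new environment is created, while the handler body runs on every invocation. `_load_config` and its 50 ms delay are hypothetical stand-ins for slow setup such as opening connections.

```python
import time

# Module-level code runs once per execution environment (the cold start).
# Warm invocations reuse the same process, so this state survives between calls.
_ENV_STARTED_AT = time.monotonic()
_invocation_count = 0


def _load_config():
    """Hypothetical slow setup: opening connections, loading models, etc."""
    time.sleep(0.05)
    return {"greeting": "hello"}


_CONFIG = _load_config()  # paid once, during the cold start


def handler(event, context):
    """Runs on every invocation and reuses the state prepared above."""
    global _invocation_count
    _invocation_count += 1
    return {
        "cold_start": _invocation_count == 1,
        "greeting": _CONFIG["greeting"],
        "env_age_seconds": round(time.monotonic() - _ENV_STARTED_AT, 3),
    }
```

Calling `handler` twice in the same process reports `cold_start` as true only for the first call. Keeping expensive setup outside the handler is a common way to make warm starts cheap, although nothing guarantees how long a platform keeps an environment alive.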

Serverless is inherently scalable and flexible. The platform spins up as many parallel instances of a function as needed to handle incoming events. During peak loads, hundreds or thousands of function instances may run simultaneously, while in idle times, none are running (resulting in no cost).
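How many parallel instances a workload needs can be estimated with Little's law: average concurrency equals arrival rate multiplied by average duration. The sketch below applies it; the traffic figures are assumptions chosen for illustration, and real platforms also apply per-account concurrency limits that this estimate does not model.

```python
import math


def required_concurrency(requests_per_second, avg_duration_ms):
    """Little's law: concurrent instances = arrival rate x time in system."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


if __name__ == "__main__":
    # Assumed peak: 500 requests per second, each taking 200 ms.
    print(required_concurrency(500, 200))
    # Assumed quiet period: no traffic, so no instances are needed.
    print(required_concurrency(0, 200))
```

The same formula explains scale to zero: with no incoming events, the required concurrency is zero.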

In practice, using serverless means deploying function code to a platform (e.g. via a CLI or web console) and defining its triggers. All traditional administration – server purchasing or configuration, OS installation, cluster scaling, machine monitoring – is outsourced to the service provider. This allows teams to deliver new functionality faster, skipping the infrastructure setup phase.

Benefits of serverless: scalability, costs, and time-to-market

The serverless model offers many benefits important from a business perspective. The key ones include:

- Automatic scalability – Serverless functions scale automatically in response to load. The platform dynamically adjusts computing power to the number of requests, handling traffic even during sudden spikes in demand. Developers don’t have to plan capacity or manually add servers – the app can scale from zero to millions of users.

- Cost efficiency – Serverless often lowers infrastructure costs, especially for apps with variable or intermittent loads. We only pay for the execution time and resources used, avoiding idle costs. This reduces operational expenses – no servers to maintain means savings on hardware, energy, admin, and licenses.

- Faster time-to-market – With serverless, teams can create, test, and deploy new app features faster. No need to configure infrastructure means developers focus on business logic, speeding up development.

- No infrastructure maintenance – Serverless means outsourcing infrastructure management to the provider. Companies don’t need admin teams for OS updates, network setup, etc. The provider also ensures built-in high availability and fault tolerance.

In summary, serverless offers “unlimited” scalability, pay-for-usage only, and fast feature delivery, making it attractive for companies seeking rapid growth and cost optimization. No wonder this architecture has gained popularity – the global serverless market was valued at around $8B in 2022 and is projected to exceed $50B by 2031.

Limitations and challenges of the serverless model

Despite many advantages, serverless also comes with limitations and potential downsides. The most important include:

- Cold starts – To efficiently manage resources, serverless platforms suspend or shut down unused functions. When a new request arrives after a while, the function must be restarted, causing a delay in the first response. This can be problematic for real-time systems.

- Vendor lock-in – Serverless apps often depend strongly on a specific cloud provider and its ecosystem. Migration can be costly and time-consuming, especially if the app uses vendor-specific services.

- Harder debugging and monitoring – The function’s runtime is managed by the provider, limiting access to system logs or full debugging capabilities. It requires a shift in approach to observability.

- Runtime environment limitations – Platforms cap execution time (e.g. 15 minutes for AWS Lambda), available memory, and code package size, and support only certain programming languages. Not every app fits within these constraints.

- Costs under heavy and continuous load – Over time, with very high and predictable traffic, serverless can be more expensive than traditional approaches (e.g. containers or dedicated infrastructure).
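The cost point in the last item can be checked with a rough break-even calculation: at what monthly request volume does a pay-per-use bill match a fixed-price server? The sketch below is a simplification. The unit prices and the 70-per-month server cost are assumed placeholder values, and the fixed-price option is treated as able to absorb the whole load.

```python
def cost_per_request(avg_duration_ms, memory_mb,
                     price_per_gb_second, price_per_million_requests):
    """Pay-per-use cost of one invocation: compute charge plus request fee."""
    compute = (avg_duration_ms / 1000) * (memory_mb / 1024) * price_per_gb_second
    return compute + price_per_million_requests / 1_000_000


def break_even_requests(fixed_monthly_cost, per_request_cost):
    """Monthly request volume at which both options cost the same."""
    return fixed_monthly_cost / per_request_cost


if __name__ == "__main__":
    per_request = cost_per_request(
        avg_duration_ms=100,
        memory_mb=1024,
        price_per_gb_second=0.0000167,      # placeholder price
        price_per_million_requests=0.20,    # placeholder price
    )
    # Assumed alternative: an always-on server costing 70 per month.
    print(round(break_even_requests(70.0, per_request)))
```

Under these assumptions the break-even lands at roughly 37 million requests a month. Below that volume the pay-per-use model is cheaper; well above it, steady traffic starts to favor fixed capacity.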

To sum up: serverless isn’t a silver bullet. While solving many problems (scaling, admin), it also introduces new challenges – from cold starts to limited control and potential lock-in. Choosing this architecture should be based on app requirements and a conscious evaluation of trade-offs.
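One common way to soften the lock-in trade-off is to keep business logic free of provider types and wrap it in thin, provider-specific adapters. The sketch below illustrates the pattern only. `create_order` and both adapters are hypothetical names, and the second adapter targets an imagined platform that passes an already-parsed dictionary.

```python
import json


def create_order(customer_id, items):
    """Provider-independent business logic: no cloud SDK types in or out."""
    if not items:
        raise ValueError("an order needs at least one item")
    return {"customer_id": customer_id, "item_count": len(items)}


def lambda_style_adapter(event, context):
    """Thin adapter for a platform that passes the HTTP body as a JSON string."""
    payload = json.loads(event["body"])
    order = create_order(payload["customer_id"], payload["items"])
    return {"statusCode": 201, "body": json.dumps(order)}


def dict_style_adapter(request):
    """Thin adapter for a hypothetical platform that passes a parsed dict."""
    order = create_order(request["customer_id"], request["items"])
    return order, 201
```

Moving to another platform then means rewriting an adapter of a few lines rather than the logic itself. Dependencies on vendor-specific databases, queues, or identity services are not addressed by this pattern.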

Top serverless platforms

Here’s an overview of the most popular solutions on the market:

- AWS Lambda – The most mature and well-known platform. Supports many languages (Python, Node.js, Java, Go, etc.). Deep integration with the AWS ecosystem. Execution time limit: 15 minutes.

- Azure Functions – Microsoft’s counterpart to Lambda. Works with C#, JavaScript, Python, and more. Good integration with Microsoft tools and Azure services. Time limit on the consumption plan: 5 minutes by default, configurable up to 10 minutes.

- Google Cloud Functions – Google’s platform. Supports Node.js, Python, Go, and others (via containers in Gen2). Natural choice for those already using GCP. Limit: 9 minutes (1st gen; 2nd-gen HTTP functions can run for up to 60 minutes).

- Cloudflare Workers – Runs functions close to users – at the edge. Ideal for low latency. Very fast cold starts but a limited runtime environment (e.g. no full Node.js).

- Vercel Serverless Functions – Super simple backend for web apps (e.g. Next.js). Just put function files in the /api folder. Infrastructure is completely hidden.

- Netlify Functions – Similar to Vercel, but in the Netlify environment. Integrates with Jamstack, static sites, and frontends. Great for simple automations and backend logic.

- OpenFaaS – Open-source alternative. Can run on your own Kubernetes or locally. Ideal for companies wanting to control the environment or avoid vendor lock-in.

Other solutions include:

- IBM Cloud Functions – Based on Apache OpenWhisk. Supports many languages and integrates with IBM Cloud.

- Oracle Cloud Functions – Based on Fn Project, aimed at Oracle Cloud customers.

- Red Hat OpenShift Serverless (Knative) – Enables function development based on containers in Kubernetes/OpenShift environments.

Each of these platforms has a slightly different profile. Big clouds (AWS, Azure, GCP) offer broad flexibility and integrations, Vercel/Netlify aim for frontend simplicity, and Cloudflare focuses on edge computing.

Practical use cases

- Microservices and modular architecture – Breaking apps into small functions allows independent scaling and deployment of components. Ideal for DevOps teams and companies building highly available apps.

- Data processing and ETL – Automatic reactions to file uploads, stream events, scheduled data transformations. Great in BI, IoT, and data pipelines.

- Backend for web/mobile apps – Fast and scalable APIs for frontend communication. Often combined with services like Firebase, Supabase, Auth0, or AWS Amplify.

- Automations and batch jobs – Cron jobs, system integrations, report sending, webhooks. Dozens of processes can be automated safely without managing servers.
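As an illustration of the data processing case, here is a sketch of a function that reacts to file uploads. It assumes the event layout of Amazon S3 upload notifications (`Records`, each with an `s3` section holding the bucket name and a URL-encoded object key). The sample bucket and file names are made up, and the actual download and transformation step is left as a comment.

```python
from urllib.parse import unquote_plus


def extract_uploads(event):
    """Return (bucket, key, size) for each uploaded object named in the event."""
    uploads = []
    for record in event.get("Records", []):
        s3 = record.get("s3")
        if not s3:
            continue  # ignore records from other event sources
        key = unquote_plus(s3["object"]["key"])  # keys arrive URL-encoded
        uploads.append((s3["bucket"]["name"], key, s3["object"].get("size", 0)))
    return uploads


def handler(event, context):
    """Invoked once per upload notification."""
    uploads = extract_uploads(event)
    # A real pipeline would download and transform each object here.
    return {"processed": [f"{bucket}/{key}" for bucket, key, _ in uploads]}


if __name__ == "__main__":
    sample_event = {  # hypothetical bucket and file names
        "Records": [
            {"s3": {"bucket": {"name": "uploads-bucket"},
                    "object": {"key": "reports/2024+q1.csv", "size": 1024}}}
        ]
    }
    print(handler(sample_event, None))
```

Each upload triggers its own invocation, so a burst of files is processed in parallel without any queue workers to manage.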

Summary and the future of serverless

Serverless computing is a powerful approach to building scalable applications. Its popularity is growing, and providers keep working to reduce limitations such as cold starts and lock-in. It’s especially attractive to startups, innovation teams, and companies looking to experiment quickly with low entry costs.

But not everything can (or should) be serverless. For apps requiring low latency, continuous processing, or custom environments, traditional containers or dedicated infrastructure may be better.

Serverless is a strong choice when the load is variable and deployment speed is critical. In practice, many companies use hybrid approaches – part of the system runs as functions, part in containers or VMs.

This is no passing trend. Serverless is already a key part of modern cloud architectures and its importance will only grow in the coming years.

Sources:

Articles

Serverless computing: is it the future of scalable applications?

What is serverless computing and how does it help build scalable applications? Pros, cons, applications and an overview of the major platforms.

Engineering approach in practice - CraftByte Studio standards

How we develop software with engineering precision: architecture, CI/CD, integration testing. No buzzwords, no rubbish. Check.

Scalable architecture from the ground up

Learn how to build a scalable SaaS architecture with best practices in microservices, event-driven design, database scaling, and performance optimization.

How to Effectively Automate Business Processes with Software

Discover how business process automation can streamline workflows, reduce costs, and improve efficiency using AI, RPA, and workflow automation tools.

Key Global IT Trends Shaping the Future of Business

Top IT Trends 2025! AI, Automation, Cloud, Cybersecurity & the Future of Technology Shaping Innovation in Modern Business!

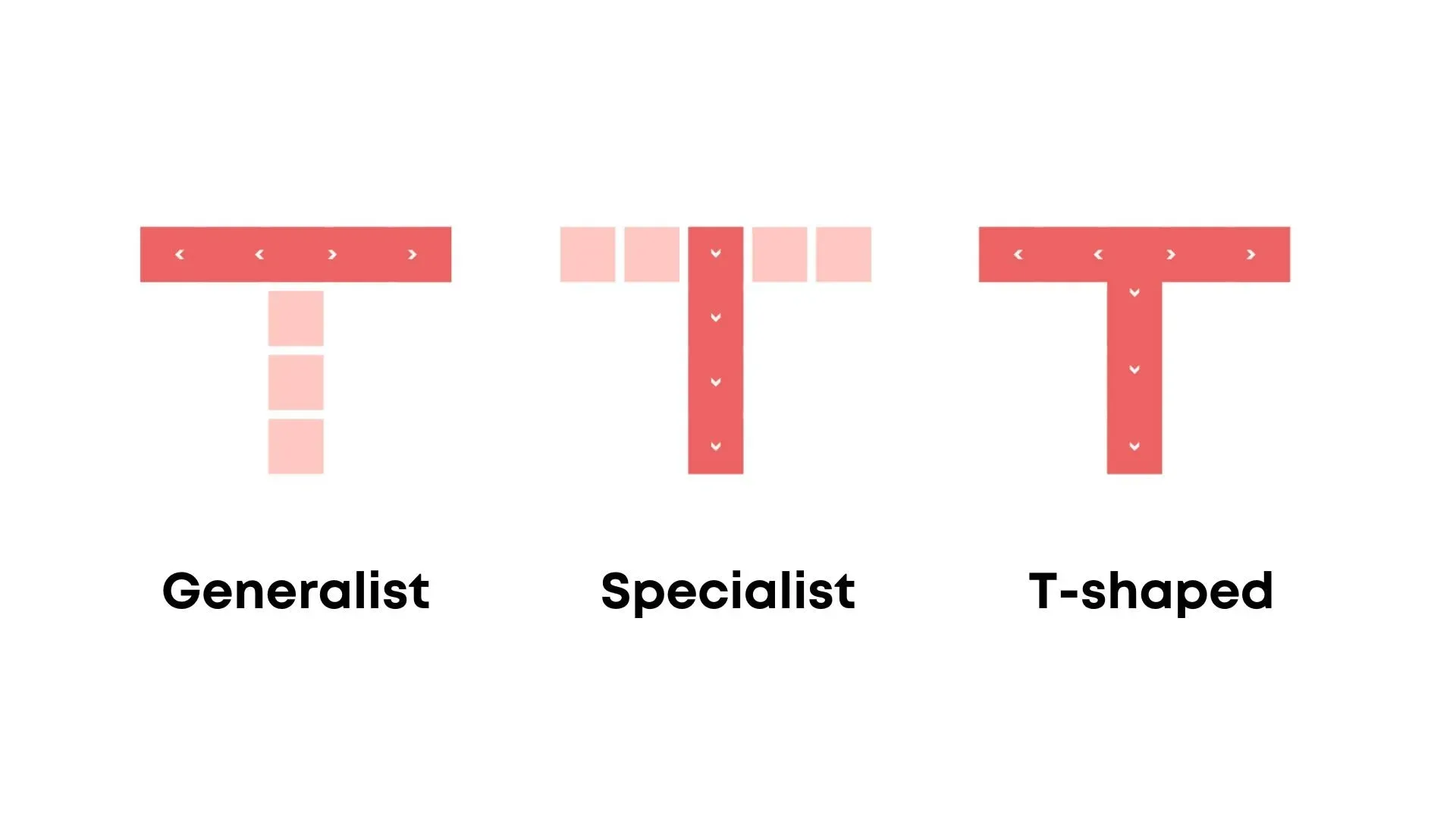

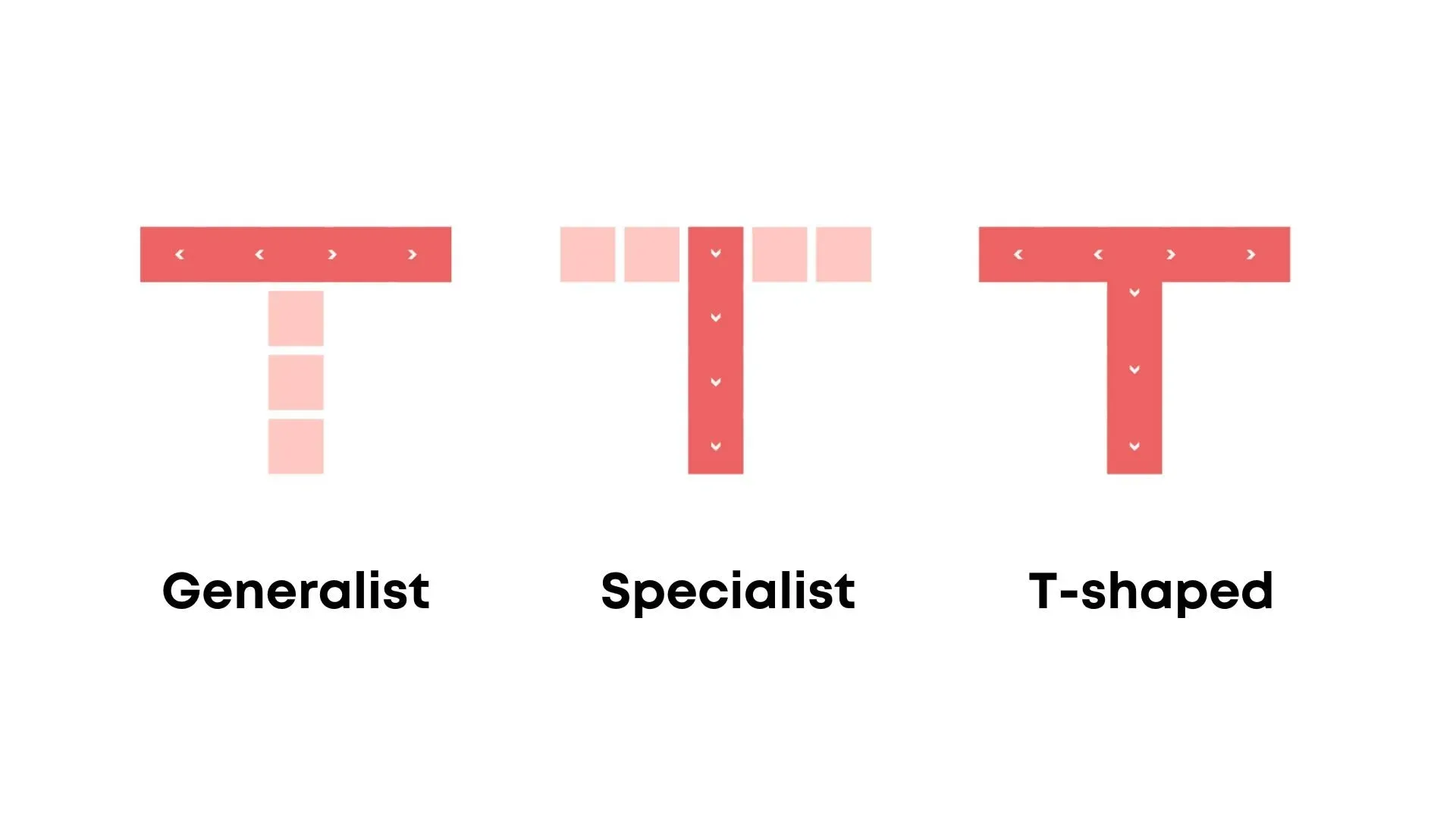

Specialist vs. Generalist in IT

Specialists excel in deep expertise, while generalists thrive on adaptability. The best developers blend both for long-term success in an AI-driven world.

Custom Software: When Does It Make Sense?

Struggling with off-the-shelf software limitations? Discover when custom solutions make sense for scalability, efficiency, and long-term savings.